Comprehensive Guide to Decoding Parameters and Hyperparameters in Large Language Models (LLMs)

Table of contents

- 📌 Comprehensive Guide to Decoding Parameters and Hyperparameters in Large Language Models (LLMs)

- 📖 Introduction

- 🎯 Decoding Parameters: Shaping AI-Generated Text

- ⚡ Hyperparameters: Optimizing Model Training

- 🔥 Final Thoughts: Mastering LLM Tuning

📖 Introduction

Large Language Models (LLMs) like GPT, Llama, and Gemini power a fast-growing range of AI applications. To control their behavior, developers need to understand two kinds of settings: decoding parameters, which are applied at inference time and shape how text is generated, and hyperparameters, which are set before training and govern how efficiently and accurately the model learns.

This guide provides a deep dive into these crucial parameters, their effects, and practical use cases. 🚀

🎯 Decoding Parameters: Shaping AI-Generated Text

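Decoding parameters affect the creativity, coherence, diversity, and randomness of generated output. They are applied at inference time and do not change the model's weights. Adjusting them can push your LLM's output toward focused and conservative (better for factual tasks), toward creative, or somewhere in between.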

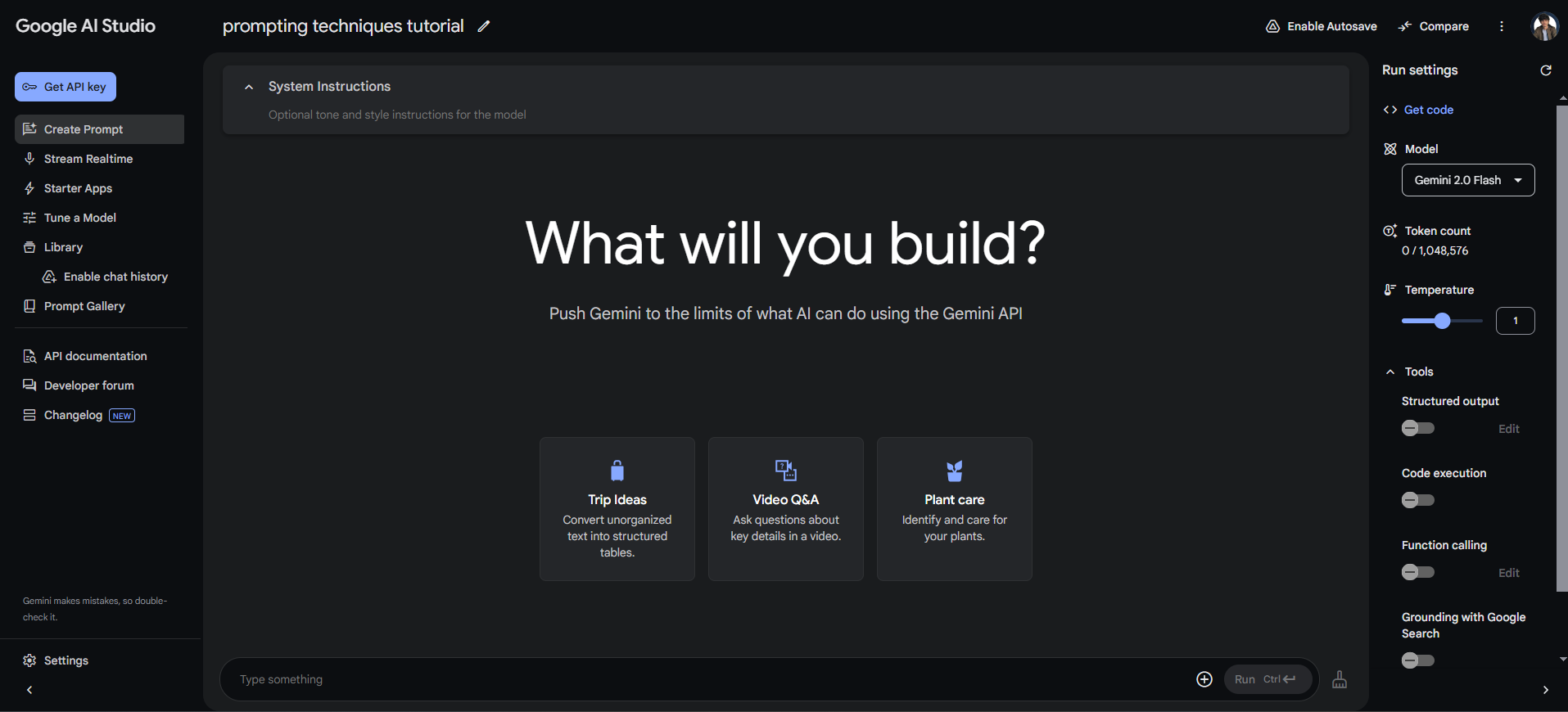

🔥 1. Temperature

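Controls randomness by dividing the logits by the temperature T before applying softmax: T < 1 sharpens the distribution toward the most likely tokens, while T > 1 flattens it.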

| Value | Effect |
| --- | --- |
| Low (0.1-0.3) | More deterministic and focused; suited to factual tasks. |
| High (0.8-1.5) | More creative and varied, but potentially incoherent. |

✅ Use Cases:

Low: Customer support, legal & medical AI.

High: Storytelling, poetry, brainstorming.

model.generate("Describe an AI-powered future", temperature=0.9)
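To make the scaling concrete, here is a minimal pure-Python sketch (no model required) of temperature applied to a toy list of logits. Each logit z_i is divided by T before softmax, so p_i = exp(z_i / T) / sum_j exp(z_j / T). The function name and logit values are made up for illustration.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Return probabilities after dividing each logit by `temperature`."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    # Subtract the max for numerical stability; the probabilities are unchanged.
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical logits for four candidate tokens.
logits = [2.0, 1.0, 0.5, -1.0]

for t in (0.2, 1.0, 1.5):
    probs = softmax_with_temperature(logits, t)
    print(f"T={t}: " + ", ".join(f"{p:.3f}" for p in probs))
```

At low temperature almost all probability lands on the top token; at higher temperature it spreads across the alternatives, which is where the extra variety (and the risk of incoherence) comes from.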

🎯 2. Top-k Sampling

At each step, restricts sampling to the k most probable tokens; all other tokens are discarded and the remaining probabilities are renormalized.

| k Value | Effect |
| --- | --- |
| Low (5-20) | More predictable, focused outputs (k = 1 is equivalent to greedy decoding). |
| High (50-100) | Greater diversity, with a higher risk of incoherence. |

✅ Use Cases:

Low: Technical writing, summarization.

High: Fiction, creative applications.

model.generate("A bedtime story about space", top_k=40)
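The filtering step itself is only a few lines. This is a minimal sketch on a hypothetical five-word vocabulary; `top_k_filter` and `sample` are illustrative helpers, not a library API.

```python
import random

def top_k_filter(probs, k):
    """Keep the k most probable tokens, zero out the rest, and renormalize."""
    if k < 1:
        raise ValueError("k must be at least 1")
    # Indices of the k largest probabilities.
    keep = set(sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k])
    total = sum(probs[i] for i in keep)
    return [probs[i] / total if i in keep else 0.0 for i in range(len(probs))]

def sample(probs, rng):
    """Draw one token index in proportion to `probs`."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

# Hypothetical next-token distribution over a tiny vocabulary.
vocab = ["the", "a", "one", "this", "banana"]
probs = [0.40, 0.25, 0.15, 0.12, 0.08]

filtered = top_k_filter(probs, k=2)   # only "the" and "a" keep nonzero probability
rng = random.Random(0)                # seeded for reproducibility
print([vocab[sample(filtered, rng)] for _ in range(5)])
```

Because the cutoff is a fixed count, k behaves the same whether the model is confident or not; top-p addresses exactly that.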

🎯 3. Top-p (Nucleus) Sampling

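Samples from the smallest set of top tokens whose cumulative probability reaches p, so the candidate pool shrinks when the model is confident and grows when it is uncertain.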

| p Value | Effect |
| --- | --- |
| Low (0.8) | Focused, high-confidence outputs. |
| High (0.95-1.0) | More variation, less predictability (p = 1.0 disables the cutoff). |

✅ Use Cases:

Low: Research papers, news articles.

High: Chatbots, dialogue systems.

model.generate("Describe a futuristic city", top_p=0.9)
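A minimal sketch of nucleus filtering shows the "dynamic" part: with the same p, a confident distribution keeps one token while a flat one keeps many. The helper names and distributions are hypothetical.

```python
def top_p_filter(probs, p):
    """Keep the smallest set of top tokens whose cumulative probability reaches p."""
    if not 0.0 < p <= 1.0:
        raise ValueError("p must be in (0, 1]")
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    keep, cumulative = set(), 0.0
    for i in order:
        keep.add(i)
        cumulative += probs[i]
        if cumulative >= p:
            break
    total = sum(probs[i] for i in keep)
    return [probs[i] / total if i in keep else 0.0 for i in range(len(probs))]

def pool_size(filtered):
    """Number of tokens that survive the filter."""
    return sum(1 for x in filtered if x > 0)

confident = [0.92, 0.05, 0.02, 0.01]         # model is sure of the next token
uncertain = [0.28, 0.24, 0.20, 0.16, 0.12]   # probability spread across options

print(pool_size(top_p_filter(confident, 0.9)))  # 1
print(pool_size(top_p_filter(uncertain, 0.9)))  # 5
```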

🎯 4. Additional Decoding Parameters

🔹 Mirostat (adaptively controls perplexity, keeping the text's "surprise" close to a target for more stable generation)

- mirostat = 0: Disabled
- mirostat = 1: Mirostat sampling
- mirostat = 2: Mirostat 2.0

model.generate("A motivational quote", mirostat=1)

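🔹 Mirostat Eta & Tau (learning rate and target surprise)

- mirostat_eta: Learning rate of Mirostat's feedback loop; lower values produce slower, more controlled adjustments.
- mirostat_tau: Target surprise (entropy); lower values produce more focused, coherent text, higher values more diverse text.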

model.generate("Explain quantum physics", mirostat=2, mirostat_eta=0.1, mirostat_tau=5.0)  # eta and tau only take effect when mirostat is 1 or 2
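The feedback loop behind Mirostat 2.0 can be sketched in a few lines. This is a simplified illustration modeled loosely on the Mirostat 2.0 sampler as implemented in llama.cpp, not a drop-in replacement for any library: it keeps a running threshold mu (commonly initialized to 2 × tau), discards tokens whose surprise -log2(p) exceeds mu, samples from the rest, and then moves mu by eta times the gap between the observed surprise and the target tau. The function name and toy distribution are hypothetical.

```python
import math
import random

def mirostat_v2_step(probs, mu, tau, eta, rng):
    """One sampling step; returns (token_index, updated_mu)."""
    # Sort candidates from most to least probable.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    # Keep tokens whose surprise -log2(p) does not exceed mu (always keep at least one).
    kept = [i for i in order if probs[i] > 0 and -math.log2(probs[i]) <= mu] or [order[0]]
    total = sum(probs[i] for i in kept)
    renorm = [probs[i] / total for i in kept]
    # Sample from the truncated, renormalized distribution.
    choice = rng.choices(range(len(kept)), weights=renorm, k=1)[0]
    # Observed surprise of the sampled token, measured on the renormalized distribution.
    observed = -math.log2(renorm[choice])
    # Nudge mu so that future surprise moves toward the target tau.
    new_mu = mu - eta * (observed - tau)
    return kept[choice], new_mu

tau, eta = 5.0, 0.1   # same values as the example above
mu = 2 * tau          # common initialization
rng = random.Random(42)
probs = [0.5, 0.2, 0.1, 0.08, 0.05, 0.04, 0.02, 0.01]
for _ in range(5):
    token, mu = mirostat_v2_step(probs, mu, tau, eta, rng)
    print(token, round(mu, 3))
```

A smaller eta makes mu drift more slowly, which matches the "slower, more controlled adjustments" described above.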

🔹 Penalties & Constraints

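- repeat_last_n: Number of most recent tokens considered when checking for repetition.
- repeat_penalty: Penalizes tokens that already appear in that window; values above 1.0 discourage repetition.
- presence_penalty: Applies a flat penalty to any token that has already appeared, nudging the model toward new tokens and topics.
- frequency_penalty: Applies a penalty that grows with how often a token has appeared, reducing overused words.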

model.generate("Tell a short joke", repeat_penalty=1.1, repeat_last_n=64, presence_penalty=0.5, frequency_penalty=0.7)
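The sketch below shows one common way these penalties are applied to logits before sampling. It is modeled on llama.cpp-style samplers: the repeat penalty divides positive logits and multiplies negative ones, and the frequency and presence penalties are subtracted using the same additive form that OpenAI's API documentation describes. Exact behavior differs between libraries, so treat this as an illustration; the function name and toy values are hypothetical.

```python
from collections import Counter

def apply_penalties(logits, history, repeat_penalty=1.1, repeat_last_n=64,
                    presence_penalty=0.0, frequency_penalty=0.0):
    """Return a penalized copy of `logits` (a list indexed by token id)."""
    # Only the most recent `repeat_last_n` tokens are considered (0 disables the check).
    window = history[-repeat_last_n:] if repeat_last_n > 0 else []
    counts = Counter(window)
    out = list(logits)
    for token, count in counts.items():
        # Repeat penalty: shrink positive logits, push negative logits further down.
        if out[token] > 0:
            out[token] /= repeat_penalty
        else:
            out[token] *= repeat_penalty
        # Frequency penalty scales with the count; presence penalty is a flat charge.
        out[token] -= count * frequency_penalty + presence_penalty
    return out

logits = [3.0, 1.5, -0.5, 0.8]   # hypothetical logits for token ids 0..3
history = [0, 0, 2]              # token 0 was generated twice, token 2 once
print(apply_penalties(logits, history, repeat_penalty=1.1,
                      presence_penalty=0.5, frequency_penalty=0.7))
```

Tokens 1 and 3 never appeared, so their logits are untouched; token 0 is penalized hardest because it repeated twice.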

🔹 Other Parameters

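- logit_bias: Adds a bias to the logits of specific token IDs, making them more or less likely (a large negative bias effectively bans a token).
- grammar: Constrains output to a formal grammar, for example to guarantee valid JSON.
- stop_sequences: Strings at which generation stops as soon as the model produces them.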

model.generate("Complete the sentence:", stop_sequences=["Thank you", "Best regards"])
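Two of these parameters are easy to illustrate without a model. The sketch below uses hypothetical helper names: one adds a per-token bias to logits (the way an OpenAI-style `logit_bias` maps token IDs to bias values), and one trims finished text at the earliest stop sequence. Real servers usually check stop sequences incrementally while streaming; OpenAI's API, for instance, does not include the stop sequence in the returned text, and this sketch excludes it too.

```python
def apply_logit_bias(logits, logit_bias):
    """Add a bias to selected token ids; a large negative bias effectively bans a token."""
    out = list(logits)
    for token_id, bias in logit_bias.items():
        out[token_id] += bias
    return out

def truncate_at_stop(text, stop_sequences):
    """Cut `text` at the earliest occurrence of any stop sequence (excluded from the result)."""
    cut = len(text)
    for stop in stop_sequences:
        idx = text.find(stop) if stop else -1   # ignore empty stop strings
        if idx != -1 and idx < cut:
            cut = idx
    return text[:cut]

print(apply_logit_bias([1.0, 2.0, 0.5], {1: -100.0, 2: 2.0}))  # prints [1.0, -98.0, 2.5]
generated = "Happy to help with that. Thank you for asking! Best regards, Bot"
print(repr(truncate_at_stop(generated, ["Thank you", "Best regards"])))  # prints 'Happy to help with that. '
```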

⚡ Hyperparameters: Optimizing Model Training

Hyperparameters are set before training rather than learned, and they control the learning efficiency, accuracy, and performance of LLMs. Choosing good values helps the model converge reliably and generalize better.

🔧 1. Learning Rate

Sets the step size: how far the weights move in each optimizer update.

| Learning Rate | Effect |
| --- | --- |
| Low (1e-5) | Stable training, slow convergence. |
| High (1e-3) | Fast learning, risk of instability. |

✅ Use Cases:

Low: Fine-tuning models.

High: Training new models from scratch.

from torch.optim import AdamW
optimizer = AdamW(model.parameters(), lr=5e-5)  # 5e-5 is a typical fine-tuning learning rate
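A toy example shows why the step size matters. The sketch below runs plain gradient descent on f(w) = w^2, whose gradient is 2w, so each update is w <- w - lr * 2w = (1 - 2 * lr) * w. A tiny learning rate barely moves, a moderate one converges, and one above 1.0 overshoots and diverges. The function and numbers are illustrative only; LLM training uses optimizers such as AdamW and much smaller learning rates, but the same trade-off applies.

```python
def gradient_descent(lr, w0=1.0, steps=20):
    """Minimize f(w) = w**2 with fixed-step gradient descent; return the final w."""
    w = w0
    for _ in range(steps):
        grad = 2 * w          # derivative of w**2
        w = w - lr * grad     # one optimizer step
    return w

for lr in (0.001, 0.1, 1.1):
    print(f"lr={lr}: w after 20 steps = {gradient_descent(lr):.4g}")
```

The minimum is at w = 0: lr=0.001 is still close to the starting point (slow convergence), lr=0.1 gets near zero, and lr=1.1 flips sign and grows every step (instability).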

🔧 2. Batch Size

Defines how many samples are processed before updating model weights.

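| Batch Size | Effect |
| --- | --- |
| Small (8-32) | Noisier gradients and more updates per epoch; often generalizes better, but training is slower. |
| Large (128-512) | Faster training per epoch; may generalize worse unless the learning rate is retuned. |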

from torch.utils.data import DataLoader
train_dataloader = DataLoader(dataset, batch_size=32, shuffle=True)  # 32 samples per weight update
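The arithmetic behind the table is straightforward: for a fixed dataset, a larger batch means fewer weight updates per epoch, and each update averages over more samples, so its gradient estimate is less noisy. The sketch below checks both effects using synthetic "per-sample gradients" drawn from a Gaussian; the dataset size, helper names, and distribution are hypothetical. The step count assumes a final partial batch still counts as one update, which matches `DataLoader`'s default `drop_last=False`.

```python
import math
import random
import statistics

def updates_per_epoch(num_samples, batch_size):
    """Optimizer steps in one pass over the data (a final partial batch counts as one step)."""
    return math.ceil(num_samples / batch_size)

def gradient_noise(batch_size, trials=200, seed=0):
    """Std. dev. of a minibatch-mean gradient, using synthetic per-sample gradients ~ N(1, 1)."""
    rng = random.Random(seed)
    batch_means = [
        statistics.fmean([rng.gauss(1.0, 1.0) for _ in range(batch_size)])
        for _ in range(trials)
    ]
    return statistics.stdev(batch_means)

for bs in (8, 32, 512):
    print(f"batch_size={bs}: {updates_per_epoch(10_000, bs)} updates/epoch, "
          f"gradient noise ~ {gradient_noise(bs):.3f}")
```

The noise shrinks roughly as 1 / sqrt(batch size), which is why larger batches give smoother but fewer updates.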

🔧 3. Gradient Clipping

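Prevents exploding gradients by rescaling all gradients whenever their combined (global) norm exceeds a threshold, rather than capping individual values.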

| Clipping | Effect |
| --- | --- |
| Without | Risk of unstable training. |
| With (max_norm = 1.0) | Stabilizes training, smooth optimization. |

import torch
torch.nn.utils.clip_grad_norm_(model.parameters(), max_norm=1.0)  # call after loss.backward() and before optimizer.step()
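`clip_grad_norm_` rescales all gradients together instead of clipping each value independently. The pure-Python sketch below mirrors that idea on a flat list of numbers: compute the global L2 norm, and if it exceeds max_norm, multiply every gradient by max_norm / norm so the direction is preserved. PyTorch's real implementation works on tensors and supports other norm types; the helper name here is hypothetical and the small epsilon is included only to avoid division by zero.

```python
import math

def clip_by_global_norm(grads, max_norm, eps=1e-6):
    """Scale `grads` so their combined L2 norm is at most about `max_norm`.

    Returns (clipped_grads, total_norm_before_clipping).
    """
    total_norm = math.sqrt(sum(g * g for g in grads))
    clip_coef = max_norm / (total_norm + eps)
    if clip_coef < 1.0:
        # Same factor for every gradient: direction is kept, magnitude is capped.
        grads = [g * clip_coef for g in grads]
    return grads, total_norm

clipped, norm = clip_by_global_norm([3.0, 4.0], max_norm=1.0)
print(norm, clipped)   # norm is 5.0; clipped values are roughly [0.6, 0.8]
```

Gradients already below the threshold pass through unchanged, so clipping only intervenes on the occasional spike.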

🔥 Final Thoughts: Mastering LLM Tuning

Optimizing decoding parameters and hyperparameters is essential for:

✅ Striking the right balance between creativity and factual accuracy.

✅ Reducing hallucinations and repetitive, low-diversity output.

✅ Ensuring training efficiency and model scalability.

💡 Experimentation is key! Adjust these parameters based on your specific use case.

📝 What’s Next?

🏗 Fine-tune your LLM for specialized tasks.

🚀 Deploy optimized AI models in real-world applications.

🔍 Stay updated with the latest research in NLP & deep learning.

🚀 Loved this guide? Share your thoughts in the comments & follow for more AI content!

📌 Connect with me: [ GitHub | LinkedIn]

#LLMs #NLP #MachineLearning #DeepLearning #Hyperparameters #DecodingParameters #AI #Optimization